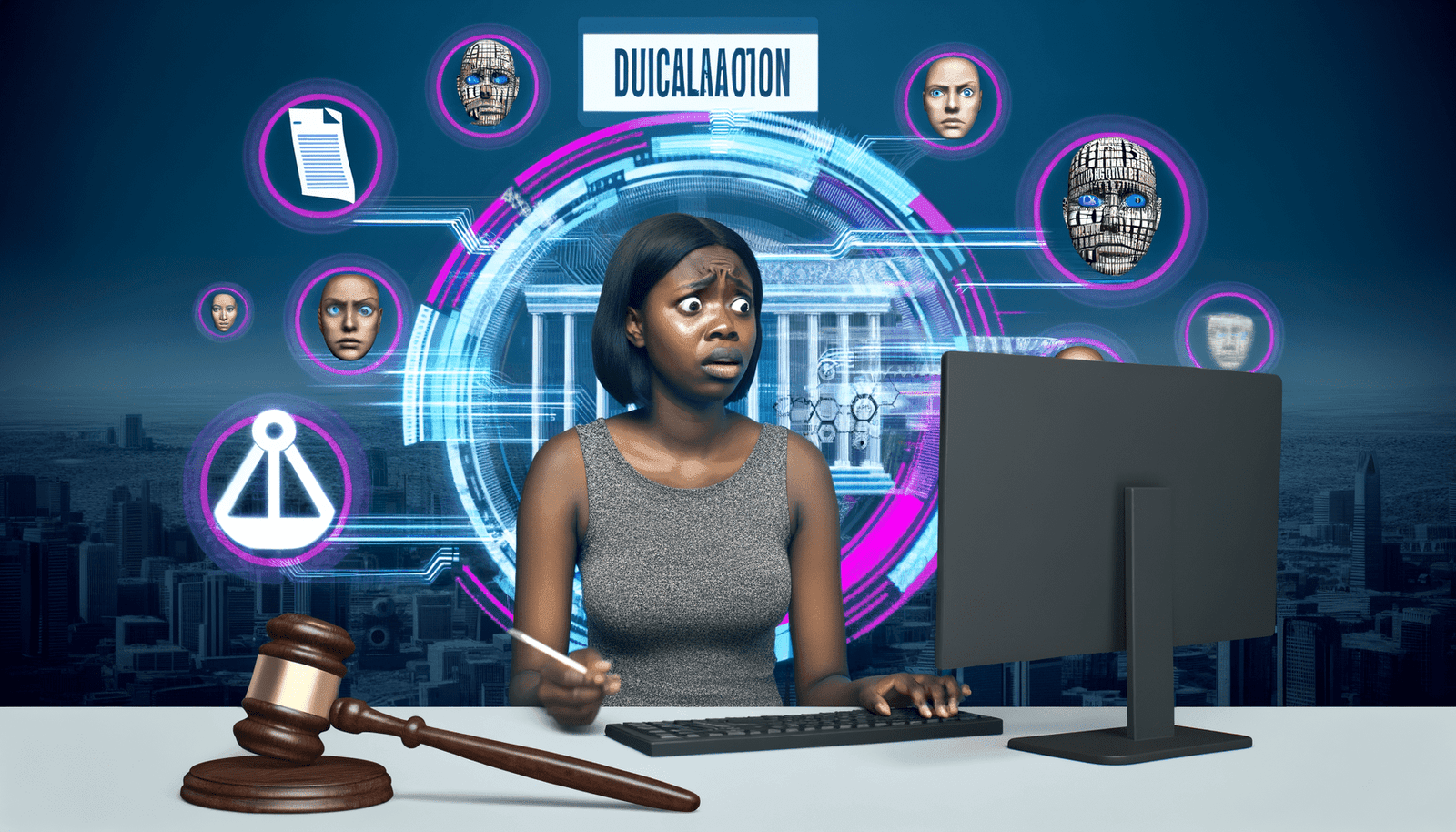

Minnesota’s anti-deepfake law has sparked a heated controversy over AI. The law aims to tackle the rise of political deepfakes, but recent developments have called its credibility into question. The plaintiffs challenging the law have submitted a filing urging the judge to withdraw a crucial declaration supporting it. Why? The document, crafted with assistance from ChatGPT, contained erroneous citations, as acknowledged by Stanford misinformation expert Jeff Hancock. This revelation raises critical concerns about the reliability of AI-generated content in legal contexts and the implications for regulations aimed at curbing misinformation.

Minnesota’s Anti-Deepfake Law: A New Frontier in AI Regulation

As we move deeper into the digital age, Minnesota’s Anti-Deepfake Law has emerged as a bold attempt to regulate artificial intelligence technologies that threaten to manipulate reality. Aiming to combat the distressing rise of political deepfakes, the legislation was expected to provide a robust framework for tackling misinformation while safeguarding the integrity of public discourse. However, a recent controversy surrounding the law could redefine its enforcement and effectiveness.

The Anatomy of the Controversy

At the heart of this clash is a revelation that has sent ripples through legal, technological, and ethical discussions. Plaintiffs challenging the law have called for the withdrawal of a pivotal declaration, a document supporting the legislation that was flagged for relying on AI-generated material. This declaration, we now know, was drafted with assistance from ChatGPT, a popular AI language model developed by OpenAI. In a twist reminiscent of a courtroom drama, experts have pointed out that the document contained multiple erroneous citations, undermining its credibility.

Jeff Hancock, a Stanford expert on misinformation, acknowledged the erroneous citations, an admission with significant implications not only for the Minnesota law but also for the broader use of AI in legal and regulatory frameworks. Should we trust AI-generated content? And what does its reliability mean for laws designed to protect us from misinformation?

The Rise of Deepfakes: A Digital Dilemma

To grasp the necessity of Minnesota’s Anti-Deepfake Law, we must first appreciate the enormous challenge posed by deepfakes. These digitally manipulated videos or audio recordings can alter reality, making it appear as though someone has said or done something they have not. Political figures have often been the victims of deepfakes, subjected to fabricated clips that can jeopardize their careers and reshape public perception in the blink of an eye.

In an era where misinformation spreads faster than wildfire, the legislation was intended to provide a shield against such manipulation. The law looks to impose punitive measures on those creating or distributing malicious deepfakes, specifically targeting political content that could mislead voters or manipulate electoral outcomes.

Legal Hurdles and Ethical Quagmires

Given Minnesota’s pioneering stance, one might have assumed the law would pass unchallenged. However, as recent events have demonstrated, the legal path can be treacherous. The challenge to the law centers on the tension between free speech protections under the First Amendment and the government’s role in regulating content. Critics argue that the law could lead to censorship and overreach, stifling creativity and expression in a digital landscape that thrives on innovation.

Moreover, the emergence of AI technologies complicates matters further. On one hand, AI can empower content creation and open new avenues for artistic expression; on the other hand, it has the potential to amplify the spread of malicious information. In this context, credible human oversight in drafting legal documents becomes paramount. It raises the question: if we start relying on AI to draft the documents that underpin our laws, how much can we trust those laws?

Is AI the Future or the Foe?

The use of AI in creating legal documents touches on an even broader issue facing society: the dependence on AI-generated information, especially for crucial decisions. While technology offers significant advancements, it also comes with risks that can compromise the integrity of systems meant to protect and inform us. Legal experts and lawmakers alike must tread carefully when leveraging AI capabilities. A misstep can lead to unintended consequences, both in the tech world and in society at large.

For example, trusting AI-composed documentation could lead to errors in policymaking, where inaccuracies undermine the foundation of the legal framework. In a world that increasingly looks to AI for solutions, recognizing its current limitations is essential. Just because a system can generate content does not mean it is immune to flaws.

Political and Social Implications

As the legal challenges around the Anti-Deepfake Law unfold, we must also consider the political landscape. The timing of these events coincides with a significant election year in the U.S., amplifying tensions as candidates prepare to launch their campaigns. With the specter of deepfake technology looming large, any misleading video could skew perception and sway elections in unexpected ways.

In this light, the question of who controls the narrative (politicians, tech companies, or the public) becomes ever more pressing. The law’s intention to safeguard democracy by combating misinformation must be pursued in a chaotic environment where the stakes are heightened. With political deepfakes threatening to proliferate, public trust in media and democracy is on the line, and the Minnesota law holds both promise and peril.

A Call for Human Oversight

The recent developments highlight a critical need for human oversight in the interplay between AI and law. Ultimately, it is people who must make decisions, assess information’s validity, and consider the ethical ramifications of their actions. The duty of lawmakers is to create legislation that relies not only on technological tools but also on unwavering human judgment. As much as AI can assist in drafting documents and aiding research, it should not replace the probing insights that humanity brings to the table.

The controversy surrounding Minnesota’s law should serve as a pivotal moment, prompting policymakers to reflect more deeply on how we integrate AI into critical functions across society. Strong foundations built on factual accuracy and integrity are essential to progress in addressing the deepfake challenge while safeguarding principles of free expression.

The Road Ahead

If the recent controversy has taught us anything, it’s that while AI has a role in our future, it cannot become an unchallenged authority, especially in areas as sensitive as human rights and public trust. The balance between innovation and ethical responsibility must guide our approach to AI technologies. Minnesota’s Anti-Deepfake Law represents a necessary, if imperfect, step toward addressing misinformation. Yet this moment of turbulence also presents an opportunity: to refine the law, engage in meaningful dialogue about the purpose of technology, and strengthen our resolve to protect the integrity of our democratic processes.

In the evolving landscape of AI and policy-making, it’s imperative to bring together diverse voices—lawyers, technologists, and ethicists—as we collectively navigate this path. The chorus of opinions will only enhance the quality of the legislation and the underlying rules governing our rapidly changing world. Engaging in collaboration is crucial to ensuring that laws crafted today can serve the best interests of society and protect us from the fallout of misinformation.

The narrative does not end here; it’s just the beginning. Every digital frontier poses challenges and opportunities. With this in mind, we can move forward, keeping in close consideration both the implications of AI and the importance of human oversight. Only then can we hope to flourish in an ecosystem committed to truth, transparency, and collective progress.

As we analyze and discuss this complex issue further, many are left wondering whether Minnesota will emerge victorious in its fight against deepfake technology or whether the laws aimed at curbing such tactics will themselves become victims of the very misinformation they seek to eradicate. The dialogue continues, and so too does our journey toward a future where technology and humanity work hand in hand.

For those looking to dive deeper into the evolving landscape of AI, misinformation, and legislative frameworks, check out Neyrotex.com for insightful resources and discussions on the impact of technology in our society.